ASL Hand Tracking

Overview

This is a demo project showcasing a custom hand pose and gesture tracking system for American Sign Language (ASL) on the Quest 2. I developed it in Unity over the course of a week and a half. I also created a short proof of concept for a gesture-based spellcasting system, in which players fingerspell the name of a spell ("Ice" in this case) and then cast it.

I wanted to explore the challenges and benefits of translating sign language in VR. This would let players who are hard of hearing or unable to speak communicate with other players who may not know sign language. It could also be extended into a tool that helps others learn sign language. I hope to use this tool in future game projects as well. The possibilities of tracking specific hand gestures are endless!

This project uses the Oculus Integration package for Unity for the base hand tracking and passthrough.

Features


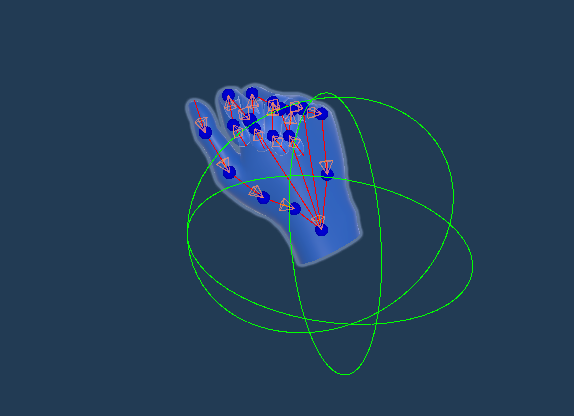

Pose Tool

A pose in this context is a static state of the hand at a single point in time. This could be anything from a fist to a Vulcan salute (what can I say, I love Star Trek). Creating all of these poses manually would have been a huge hurdle, so I knew I would need a tool for it. I created a system that references a Unity prefab and writes the current pose of a hand to that prefab. At runtime, the stored pose is compared against the live hand using the position of the wrist (the green sphere) and the rotation of each joint (the orange frustums indicate each joint's acceptance range). Since the poses are stored in prefabs, we can also make slight adjustments manually in the editor.
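The runtime comparison can be sketched roughly as follows. This is a minimal Python illustration, not the project's Unity C# code. It assumes each joint rotation is stored as a unit quaternion, that the wrist position is expressed in a consistent reference frame, and that every joint has its own acceptance angle (standing in for the orange frustums). The names `Pose`, `matches`, and `quat_angle_deg`, and the default tolerances, are hypothetical.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def quat_angle_deg(a: Quat, b: Quat) -> float:
    """Smallest rotation angle in degrees between two unit quaternions."""
    # abs() handles the double cover: q and -q encode the same rotation.
    dot = min(1.0, abs(sum(x * y for x, y in zip(a, b))))
    return math.degrees(2.0 * math.acos(dot))


@dataclass
class Pose:
    """Hypothetical stored pose: wrist position plus per-joint rotations."""
    name: str
    wrist_position: Vec3
    joint_rotations: dict[str, Quat]
    joint_tolerance_deg: dict[str, float]  # acceptance angle per joint
    wrist_tolerance: float = 0.05          # metres, illustrative default
    default_tolerance_deg: float = 20.0    # used when a joint has no entry

    def matches(self, wrist: Vec3, joints: dict[str, Quat]) -> bool:
        """True if the live hand is within every tolerance of this pose."""
        if math.dist(self.wrist_position, wrist) > self.wrist_tolerance:
            return False
        for joint, target in self.joint_rotations.items():
            current = joints.get(joint)
            if current is None:  # joint not reported this frame
                return False
            limit = self.joint_tolerance_deg.get(joint, self.default_tolerance_deg)
            if quat_angle_deg(target, current) > limit:
                return False
        return True
```

Per-joint tolerances are also what make occluded fingers workable: a joint the headset often cannot see can be given a wider acceptance angle than one it tracks reliably.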

Gestures

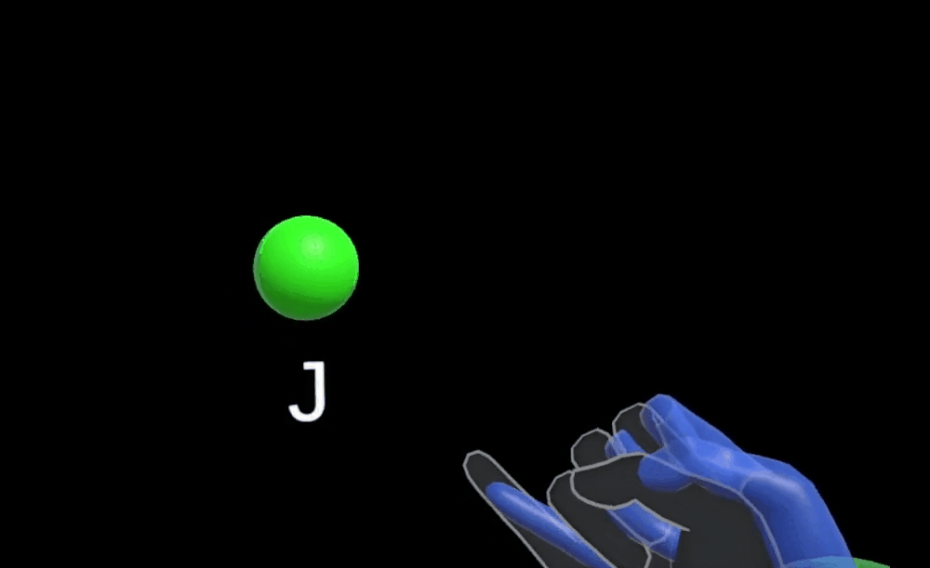

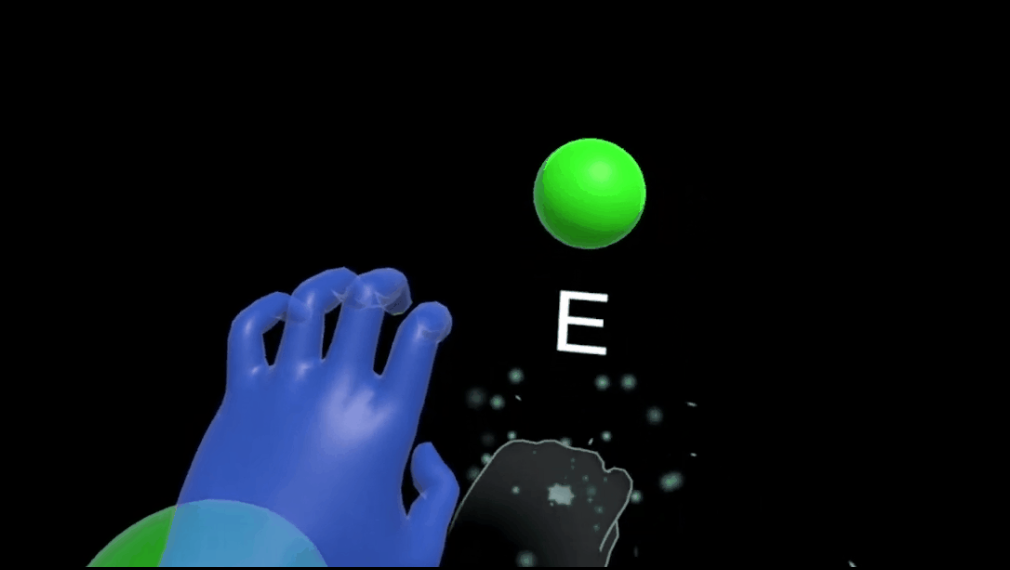

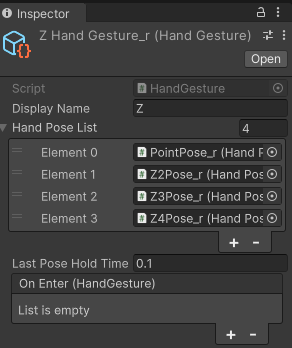

A lot of signs in ASL require motion. Among the letters alone, "J" and "Z" require multiple poses. I handled this by creating a "Gesture" that stores an ordered list of static poses. If the most recent poses made by the player's hand match any of the known gestures, that gesture is accepted. This approximates motion: the system only cares that the poses are reached in a specific order, not about the path the hand takes between them. This is slightly less accurate than tracking continuous motion, but more performant.
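The core idea, an ordered list of poses matched against the hand's most recent poses, might look like this in a simplified Python sketch. The `PoseHistory` class, the pose names such as `"J_end"`, and the choice to record a pose only when it changes are illustrative assumptions rather than details of the project.

```python
from __future__ import annotations

from collections import deque


class PoseHistory:
    """Remembers the most recent distinct poses, oldest first."""

    def __init__(self, maxlen: int = 16):
        self._poses = deque(maxlen=maxlen)

    def push(self, pose_name: str) -> None:
        # Record a pose only when it changes, so holding one pose for
        # many frames does not flood the history with duplicates.
        if not self._poses or self._poses[-1] != pose_name:
            self._poses.append(pose_name)

    def ends_with(self, sequence: list[str]) -> bool:
        """True if the latest poses equal `sequence`, in order."""
        if not sequence or len(sequence) > len(self._poses):
            return False
        return list(self._poses)[-len(sequence):] == list(sequence)


def match_gesture(history: PoseHistory, gestures: dict[str, list[str]]) -> str | None:
    """Return the first gesture whose pose sequence ends the history."""
    # Try longer gestures first so a multi-pose gesture wins over a
    # shorter one that happens to end on the same pose.
    for name, sequence in sorted(gestures.items(), key=lambda kv: len(kv[1]), reverse=True):
        if history.ends_with(sequence):
            return name
    return None
```

Only the order of the stored poses matters here; nothing about the hand's path between them is tracked, which is the accuracy-for-performance trade-off described above.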

The system checks the final pose of a gesture against the current hand first, because there is no need to check the earlier poses if the last one won't match. The last pose must also be held for an adjustable amount of time, which matters for letters such as "I", whose pose is also the starting pose of "J". Without this, the system would register "I J" every time the player signed "J".
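A hedged sketch of the final-pose-first check and the hold timer follows. It assumes a separate pose matcher reports one pose name (or `None`) per frame together with a timestamp in seconds. `Gesture`, `GestureRecognizer`, `hold_seconds`, and the example timings are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Gesture:
    name: str
    poses: list[str]           # ordered pose names; the last is the final pose
    hold_seconds: float = 0.0  # how long the final pose must be held


class GestureRecognizer:
    """Per-frame check: final pose first, then hold time, then history."""

    def __init__(self, gestures: list[Gesture], history_len: int = 32):
        self.gestures = gestures
        self.history_len = history_len
        self.history: list[str] = []      # distinct poses seen, oldest first
        self.current: str | None = None   # pose currently being held
        self.held_since = 0.0             # time at which `current` began
        self.fired = False                # a gesture already fired for this hold

    def update(self, pose: str | None, now: float) -> str | None:
        """Feed this frame's recognized pose (or None); return a gesture name or None."""
        if pose != self.current:
            self.current = pose
            self.held_since = now
            self.fired = False
            if pose is not None:
                self.history.append(pose)
                del self.history[:-self.history_len]  # keep only the newest entries
        if pose is None or self.fired:
            return None
        held = now - self.held_since
        for g in self.gestures:
            # Cheap early-out: skip unless the live pose is this gesture's final pose.
            if g.poses[-1] != pose:
                continue
            # The final pose must be held long enough before the gesture counts.
            if held < g.hold_seconds:
                continue
            # Only now compare the earlier poses against the recorded history.
            if self.history[-len(g.poses):] == g.poses:
                self.fired = True
                return g.name
        return None
```

With a hold time on "I" and none on "J", tracing a "J" reaches its final pose before the "I" timer expires, so only "J" is reported; holding the "I" pose still long enough reports "I" exactly once.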

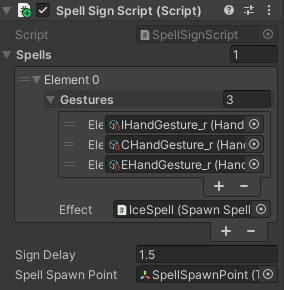

Spells

I wanted a proof of concept to show other ways this system can be applied. Once the framework is in place, spells are to gestures as gestures are to poses: we check whether the most recent gestures, in order, match any of the known spells. A spell class then triggers its effect through a "SpellCast" function.
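Applying the same pattern one level up, a spell can be modeled as an ordered list of gesture names. In this illustrative Python sketch, `Spell`, `IceSpell`, and `SpellBook` are hypothetical, `spell_cast` stands in for the project's "SpellCast" function, and the effect fires as soon as the last gesture of the sequence is recognized.

```python
from __future__ import annotations

from collections import deque


class Spell:
    """Hypothetical base class: a spell is an ordered list of gesture names."""

    def __init__(self, name: str, gestures: list[str]):
        self.name = name
        self.gestures = list(gestures)

    def spell_cast(self) -> str:
        """Trigger the spell's effect; concrete spells override this."""
        raise NotImplementedError


class IceSpell(Spell):
    def __init__(self):
        super().__init__("Ice", ["I", "C", "E"])  # fingerspelled I-C-E
        self.cast_count = 0

    def spell_cast(self) -> str:
        self.cast_count += 1
        return "ice effect"  # placeholder for a real in-game effect


class SpellBook:
    """Matches the most recent gestures against every known spell."""

    def __init__(self, spells: list[Spell], memory: int = 16):
        self.spells = spells
        self.recent: deque[str] = deque(maxlen=memory)

    def on_gesture(self, gesture: str) -> str | None:
        """Record a recognized gesture; cast and return the effect if a spell completes."""
        self.recent.append(gesture)
        recent = list(self.recent)
        for spell in self.spells:
            if recent[-len(spell.gestures):] == spell.gestures:
                self.recent.clear()  # consume the gestures so the spell fires once
                return spell.spell_cast()
        return None
```

Clearing the recent gestures after a cast is one simple way to stop a single spelling from triggering the same spell twice.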

Tools

Unity

C#

Quest 2

Hand Tracking

Passthrough

Reflection

I was really excited about this project from the outset, and I can't wait to continue working on it. Accessibility is vital in games because they are supposed to be a place where everyone can come together. Most VR games rely on voice chat, so anyone unable to speak or hear has no quick way to communicate. To be fully effective at letting someone who knows ASL communicate with someone who does not, this system would have to be combined with a voice-to-text solution.

It was a very fun puzzle to work on, and it could still use some improvements and optimizations. I want to create a more effective GUI-based tool for creating and modifying hand poses. I also want to expand on my proof of concept and create a gesture-based magic game using hand tracking. We've seen this done with controllers, but learning specific hand movements would breathe life into the game and reinforce the feeling that players are learning a skill. If possible, I would like to keep sign language as the base, potentially making the game educational at the same time. The main obstacle to tracking ASL signs is the limited capability of current hand tracking.

Some of the difficulties I ran into during this project are simply unsolvable with only a Quest 2 and hand tracking. Most signs are directed away from the signer, which means the hand sometimes occludes its own fingers from the headset's cameras. I mitigated this with tolerance angles on the finger joints, but signs such as P and Q still struggle because the middle, ring, and pinky fingers are completely hidden. In theory, this could be solved with additional external cameras viewing the hand from other angles, but not with a single Quest 2 headset.

Another issue is that the tracking software cannot recognize certain hand shapes. Currently, hand tracking cannot detect crossed fingers or a thumb tucked under other fingers. This means that letters such as M, N, and T look the same to the tracking system as S (similar to a fist), and the letter R (crossed fingers) looks like the letter U (index and middle fingers side by side, like holding up two fingers). Meta is currently at the forefront of hand tracking, so there is no guarantee that any existing system can support these hand shapes.